Starting Point

One morning I just decided, fuck it, I'm learning Machine Learning... so yeah, here we are. I started with this tutorial, which was really helpful for the technical side of things; on the theory side, I already had a rough idea of how the logic behind it works.

I had little experience with Python and none with Machine Learning, but after this tutorial I gained the confidence to build something myself, and the idea came immediately.

The idea - “Write like Kadare”

The idea was to feed the ML algorithm books by the famous Albanian writer Ismail Kadare.

The goal was to predict the next word from the previous word, just like Kadare would have written it.

Kinda like an autosuggestion tool that would suggest a word, but it's Kadare suggesting it.

Creating the dataset

First, we need to clean the data and save it in an appropriate format. We want the data split into input data and output data, so the algorithm can pick up on patterns and use them to predict outputs for inputs it has never seen.

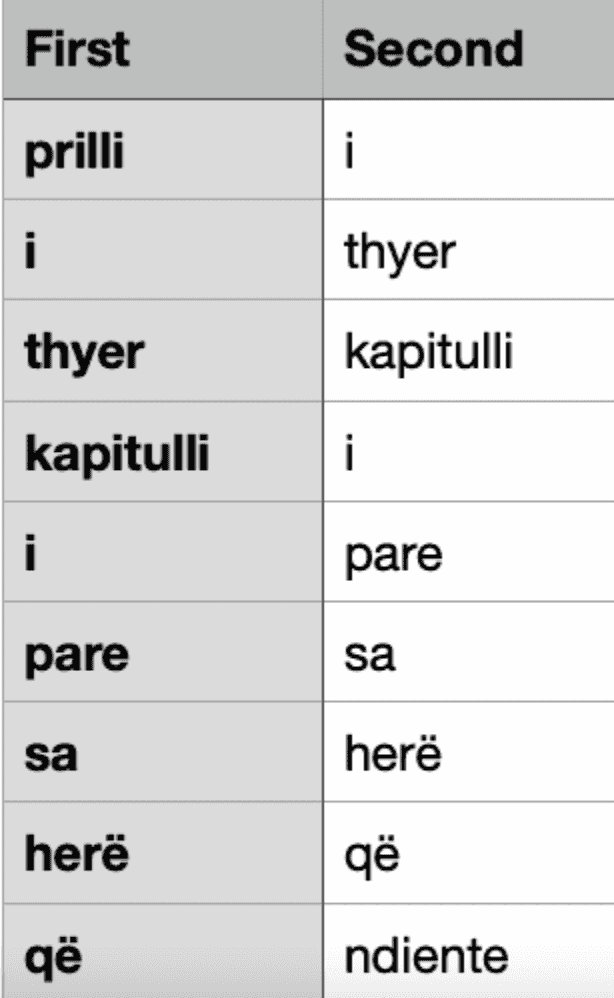

The input, in this case, is each word Kadare wrote, and the output is the word that follows it. I got one of Kadare's books online as a text file, stripped the special characters with a simple regex, and put every word into a list.

    import re

    def get_dictionary_word_list(book):
        with open(book) as f:
            text = f.read()
        # Keep letters and whitespace only; \s preserves line breaks so that
        # the last word of one line doesn't merge with the first word of the next
        textF = re.sub(r'[^a-zA-ZËëÇç\s]', '', text).lower()
        return textF.split()

    data = get_dictionary_word_list("books/prill.txt")

Then I wanted a nested list that contains every word and its successor.

Please don't judge my Python: I have no real training and have never used it on a job, so the code is mostly StackOverflow and tinkering.

    def chunks(lst, n):
        # Slide a window of n words over the list, one word at a time,
        # stopping early enough that every window is full
        for i in range(len(lst) - n + 1):
            yield lst[i:i + n]

    data = list(chunks(data, 2))

And finally, we need to write the data to a CSV file, because that's how we will feed it to the ML algorithm. We just add a header row on top.

    import csv

    header = ['First', 'Second']

    with open('prilli-i-thyer.csv', 'w', encoding='UTF8', newline='') as f:
        writer = csv.writer(f)
        writer.writerow(header)
        writer.writerows(data)

In the end it should look something like this:
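As a rough illustration of the format (using a short made-up word list, not the actual book), the sketch below runs the same pairing and CSV steps in memory and prints the result. The sample words and the `io.StringIO` buffer are assumptions for the example only.

```python
import csv
import io

# Hypothetical sample standing in for the cleaned word list from the book
words = "do te shkoj ne shkoll".split()

# Pair every word with its successor (a sliding window of size 2)
pairs = [words[i:i + 2] for i in range(len(words) - 1)]

# Write to an in-memory buffer instead of a file, just to show the layout
buffer = io.StringIO()
writer = csv.writer(buffer, lineterminator='\n')
writer.writerow(['First', 'Second'])
writer.writerows(pairs)

csv_text = buffer.getvalue()
print(csv_text)
```

Every row is one training example: the first column is the input word and the second column is the word the model should learn to predict.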

The model

I decided to use the Decision Tree Classifier from scikit-learn because that's the algorithm the guy in the tutorial used. It's probably not the best model for my specific problem, maybe even the worst, but this is my first ML project, so I care less about mistakes and more about learning along the way.

Preparing the data

So first we need to read the CSV file we created earlier with pandas.

    import pandas as pd

    # creating initial dataframe
    book_data = pd.read_csv("prilli-i-thyer.csv")

Pandas is a Python library for data manipulation and analysis.

Then we use the LabelEncoder to turn the words in each column into numbers, and store the encoded values in new columns.
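To make "assigning numerical values" concrete, here is a plain-Python sketch of the idea behind label encoding: every distinct word gets an integer, and a reverse lookup turns integers back into words. scikit-learn's LabelEncoder numbers the sorted distinct values in the same way. The `encode` and `decode` helpers and the sample words are made up for illustration.

```python
# Hypothetical sample column of words
column = ["te", "shkoj", "do", "te"]

# Sorted distinct values play the role of the encoder's known classes
classes = sorted(set(column))  # ['do', 'shkoj', 'te']
word_to_id = {word: i for i, word in enumerate(classes)}

def encode(words):
    """Map each word to its integer label."""
    return [word_to_id[w] for w in words]

def decode(ids):
    """Map integer labels back to words."""
    return [classes[i] for i in ids]

encoded = encode(column)
print(encoded)          # [2, 1, 0, 2]
print(decode(encoded))  # ['te', 'shkoj', 'do', 'te']
```

One thing to keep in mind: the numbers are arbitrary labels, so id 2 is not "bigger" than id 1 in any meaningful sense.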

    from sklearn.preprocessing import LabelEncoder

    # creating instance of labelencoder
    labelencoder = LabelEncoder()

    # Assigning numerical values and storing in another column
    book_data['First_df'] = labelencoder.fit_transform(book_data['First'])
    # This second call refits the encoder, so from here on it holds the
    # vocabulary of the 'Second' column (the one we decode predictions with)
    book_data['Second_df'] = labelencoder.fit_transform(book_data['Second'])

We will drop every column except First_df, which leaves us with the encoded input data, and assign it to X.

    # The input data
    X = book_data.drop(columns=['Second', 'First', 'Second_df'])

The drop() function won't change our current dataset; it returns a new one without these columns. It's most useful when we have to deal with multiple input or output columns.

And now we will do the same with the encoded output data.

    # The output data
    Y = book_data['Second_df']

Testing

The data is ready and it's almost time to train the model, but first we need to set aside a portion of the dataset for testing.

We want to train our algorithm, but we also need to check how accurate it is on data it hasn't seen.
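What the split does can be sketched in plain Python: shuffle the rows, then cut them into an 80% training part and a 20% testing part. This is only an illustration with made-up pairs and a hypothetical `split_rows` helper; scikit-learn's `train_test_split` does the shuffling and slicing for you.

```python
import random

def split_rows(rows, test_size=0.2, seed=0):
    """Shuffle a copy of rows and split it into (train, test)."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_size))
    return shuffled[n_test:], shuffled[:n_test]

# Hypothetical (word, next word) pairs
rows = [("do", "te"), ("te", "shkoj"), ("shkoj", "ne"), ("ne", "shkoll"),
        ("do", "te"), ("te", "vij"), ("vij", "ne"), ("ne", "shtepi"),
        ("do", "te"), ("te", "iki")]

train, test = split_rows(rows)
print(len(train), len(test))  # 8 2
```

The model only ever sees the training rows; the held-out rows are there to check its predictions afterwards.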

    from sklearn.model_selection import train_test_split

    # Splitting the dataset: 80% for training, 20% for testing
    x_train, x_test, y_train, y_test = train_test_split(X, Y, test_size=0.2)

Training

Now we create an instance of DecisionTreeClassifier and call its fit method with the input data and the expected output data.

    from sklearn.tree import DecisionTreeClassifier

    model = DecisionTreeClassifier()
    model.fit(x_train, y_train)

After training, if the dataset isn't going to change, you can save the trained model and load it each time you need it, like so:

    import joblib

    # Save once after training, then load instead of retraining
    joblib.dump(model, "kadare-model.joblib")
    model = joblib.load("kadare-model.joblib")

Anvil

Now that the model is trained, we are ready to test it with some input of our own. I used Anvil for the frontend. First we need to establish the connection.

    import anvil.server

    anvil.server.connect("SCPYFTXLFIX7QJDB7GXY5CKJ-KUKPP63RW3YRUXMJ")

Then, over in Anvil, we add an input field and handle its change event like so:

    def text_box_1_change(self, **event_args):
        word_list = self.text_box_1.text.split()
        if word_list:  # nothing to predict from while the box is empty
            result = anvil.server.call('getNextWord', word_list[-1])
            self.label_1.text = result

This fires whenever the input field changes and calls our function on the backend (to get our prediction) with the last word of the input as its parameter.

So basically when we type "Do te shkoj ne shkoll" we will only use "shkoll" to predict the next word.

Passing only the last word to the model is not the right way to do this; I learned that the hard way :). Later I will explain the mistakes made along the way and what I learned from them.

Results

And finally, we will make the getNextWord function callable from Anvil and return the prediction.

    @anvil.server.callable
    def getNextWord(currentWord):
        # Look up the encoded value of the word; it is only known if the word appeared in the book
        matches = book_data[book_data['First'] == currentWord]["First_df"]
        if len(matches) != 0:
            predictions = model.predict([[matches.iloc[0]]])
            # Decode the predicted number back into a word and return it as a plain string
            result = str(labelencoder.inverse_transform(predictions)[0])
        else:
            result = "..."
        return result

Mistakes && Conclusions

The point of the project wasn't to be perfect; it was more of a learning process that I wanted to share with you guys.

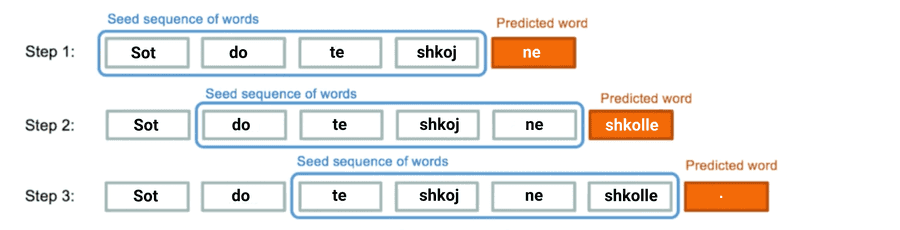

I know how I will do things differently next time. One example is how we create the dataset: it's smarter to use the sequence of words that precedes the predicted word. With that logic we could generate a coherent sentence and not just an autosuggestion tool. Here is a visual example:
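In code, the idea could look roughly like this: instead of (word, next word) pairs, each training row holds the last few words as context plus the word that follows. The `context_windows` helper, the context size of 3, and the sample sentence are assumptions for illustration, not what the project actually ran.

```python
def context_windows(words, context_size=3):
    """Yield (context, next_word) rows from a list of words."""
    for i in range(len(words) - context_size):
        context = words[i:i + context_size]
        next_word = words[i + context_size]
        yield context, next_word

# Hypothetical sample sentence
words = "do te shkoj ne shkoll".split()

rows = list(context_windows(words))
for context, next_word in rows:
    print(context, "->", next_word)
```

Each context word would then become its own encoded input column, so the model gets three inputs per row instead of one.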